Full AI Observability: The Control Plane for Autonomous Enterprise Agents

Part 3 of 3 in a series on the essentials of an enterprise‑ready AI solution

AI agents won’t remain proofs of concept for long. They are already drafting contracts, reconciling invoices, answering customer questions, writing code, and triggering workflows that change real systems. As they spread into every part of the business, these agents will take on work once reserved for humans, make multi‑step judgments, and act autonomously in complex environments. That combination of autonomy, action, and access creates a new risk surface that traditional monitoring and governance were not designed to handle. The answer is full AI observability: the ability to see, understand, and control every step of an agent’s lifecycle and its interaction with sensitive business data.

The new threat model

Today’s security and compliance stacks were designed around people and deterministic software. Agents break those assumptions:

- Anyone can run them. A junior analyst, a contractor, or an internal app can spin up an agent with minimal understanding of how it actually accomplishes a task.

- They reason and act. Agents plan, call tools, write back to systems, and iterate. Their behavior depends on context (prompts, retrieved knowledge, tool results) that shifts second to second.

- They touch your crown jewels. Agents read and transform the most sensitive enterprise data—payroll tables, PII, customer records, IP, financial forecasts—often across multiple systems.

- They are attackable in novel ways. Prompt injection, tool hijacking, and indirect attacks via data sources or third‑party apps can redirect agent behavior without exploiting a “bug” in the traditional sense.

This is a paradigm shift. The priority is not just detecting failure after the fact; it’s creating a runtime where the only actions an agent can take are demonstrably safe and within policy, and where every action is explainable and auditable.

What “full AI observability” really means

Monitoring tells you that something went wrong. Observability tells you why. For agents, full AI observability goes one step further: coupled with runtime enforcement, it prevents the wrong thing from happening in the first place. It spans five layers:

- Identity & intent. Who (human, service, or another agent) initiated the request? What was the declared purpose? What data and tools were requested, and why?

- Perception & retrieval. What inputs (prompts, documents, embeddings, features) shaped the agent’s context? Which records were retrieved or filtered out?

- Deliberation & planning (summarized). What high‑level plan did the agent form? Which policies or constraints influenced that plan? (Capture structured, policy‑aware summaries and citations rather than raw private reasoning.)

- Action execution. Which tools, functions, or transactions did the agent call? With what parameters? What data was read, written, or moved?

- Outcomes & impact. What changed in the real world? Was the result correct, compliant, timely, and cost‑efficient? Can we replay it, explain it, or roll it back?
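To make the five layers concrete, here is a minimal sketch of a trace event record, assuming every event carries a shared trace ID, the initiating principal, and the declared purpose. The `Layer`, `TraceEvent`, and `missing_layers` names are illustrative and not part of any particular product or tracing standard; a real deployment would more likely emit these as spans through a tracing SDK. The helper reports which layers a trace never recorded, a quick way to spot instrumentation gaps.

```python
import time
import uuid
from dataclasses import dataclass, field
from enum import Enum
from typing import Any


class Layer(Enum):
    """The five observability layers (names are illustrative)."""
    IDENTITY_INTENT = "identity_intent"
    PERCEPTION_RETRIEVAL = "perception_retrieval"
    PLANNING_SUMMARY = "planning_summary"
    ACTION_EXECUTION = "action_execution"
    OUTCOME_IMPACT = "outcome_impact"


@dataclass(frozen=True)
class TraceEvent:
    trace_id: str                 # shared by every event in one agent task
    layer: Layer
    principal: str                # human, service, or agent that initiated the task
    purpose: str                  # declared purpose, e.g. "resolve ticket #123"
    attributes: dict[str, Any] = field(default_factory=dict)  # tool, params, sources, cost...
    timestamp: float = field(default_factory=time.time)


def new_trace_id() -> str:
    """Random 128-bit ID rendered as 32 hex characters."""
    return uuid.uuid4().hex


def missing_layers(events: list[TraceEvent]) -> set[Layer]:
    """Return the layers with no recorded event, i.e. gaps in instrumentation."""
    return set(Layer) - {e.layer for e in events}


# Usage: a trace that recorded intent and one action, but nothing else.
tid = new_trace_id()
events = [
    TraceEvent(tid, Layer.IDENTITY_INTENT, "alice@example.com", "resolve ticket #123"),
    TraceEvent(tid, Layer.ACTION_EXECUTION, "alice@example.com", "resolve ticket #123",
               {"tool": "crm.update_ticket", "params": {"status": "closed"}}),
]
print(sorted(layer.value for layer in missing_layers(events)))
# ['outcome_impact', 'perception_retrieval', 'planning_summary']
```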

Non‑negotiable capabilities

To implement those layers, enterprises need the following capabilities wired into the agent runtime—not bolted on after deployment:

- Purpose‑based access control. Bind access to a declared purpose (e.g., “resolve ticket #123”) and time‑box it. No open‑ended credentials.

- Data control plane. Centralize data inventory, lineage, and fine‑grained entitlements (table/column/row/cell). Enforce dynamic masking, PII detection, tokenization, and just‑in‑time credentials at query time.

- Action control plane. Register every tool/function an agent can call with explicit scopes, rate limits, preconditions, and safeguards (e.g., dry‑run, required approval, or reversible writes backed by a compensating action). Provide kill switches at the principal, tool, and workflow level.

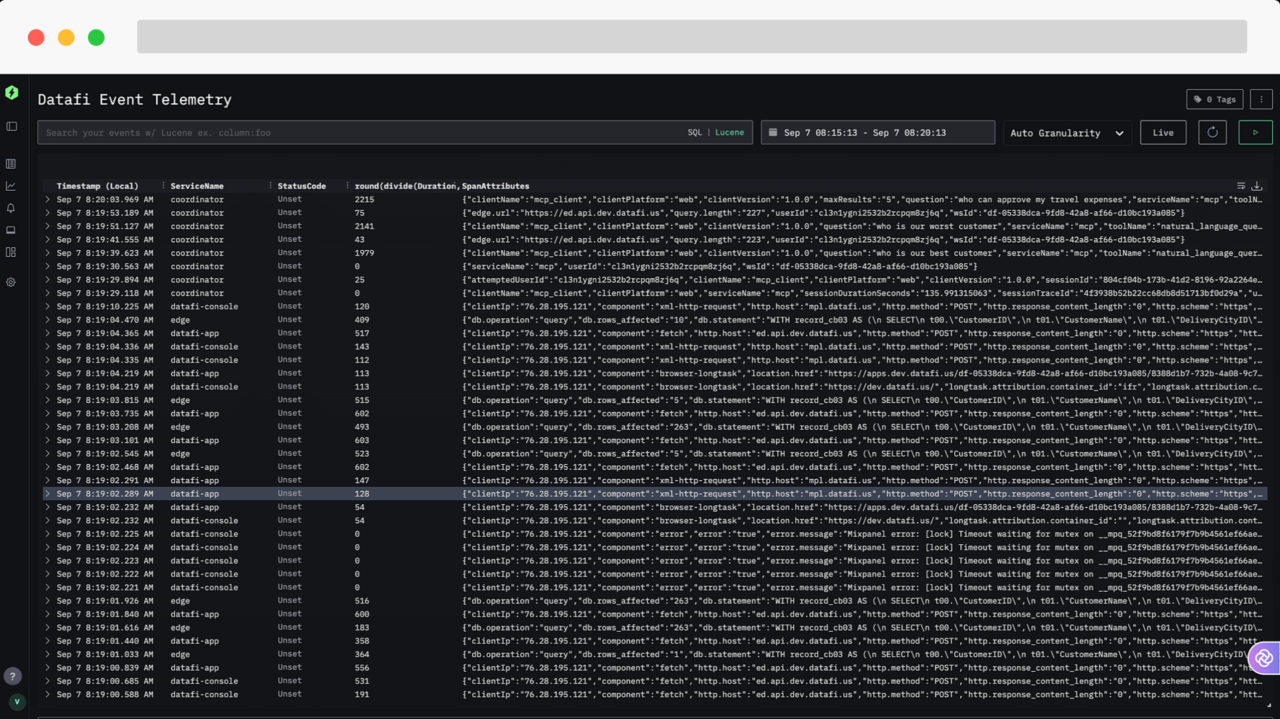

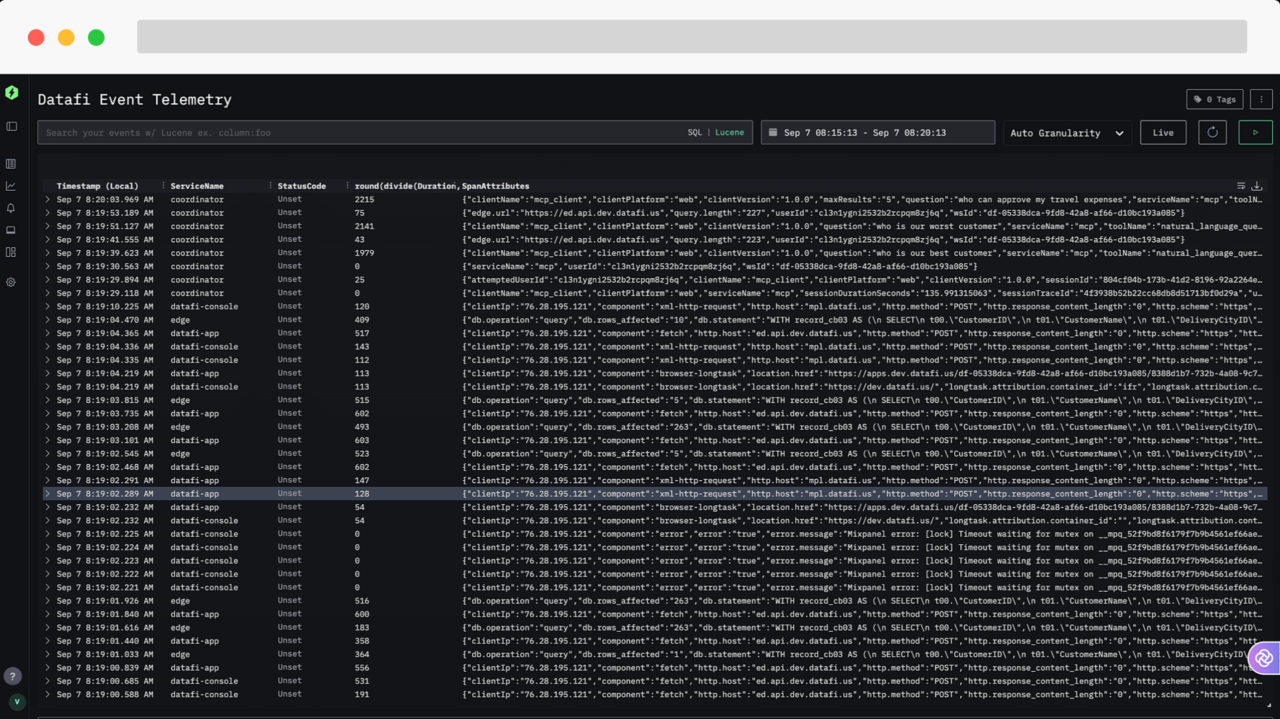

- Distributed tracing for AI. Correlate prompts, retrievals, tool calls, and system writes via a single trace ID. Include inputs, outputs, durations, costs, and policy decisions.

- Policy as code. Express authorization, purpose, data residency, and safety rules in versioned code (e.g., OPA/Rego‑style). Evaluate policies at decision time and at action time.

- Grounding & validation. Require evidence for claims (citations to retrieved sources), detect hallucination risks, and validate outputs against schemas and business rules before acting.

- Risk analytics. Score each request and action using anomaly detection (unusual joins, large result sets, atypical tools, sensitive fields). Escalate to human approval when thresholds are exceeded.

- Tamper‑evident audit & replay. Write an immutable, queryable ledger of events for compliance, incident response, and training data curation. Support time‑travel replay in “shadow mode.”

- Human‑in‑the‑loop. Make approvals ergonomic: one‑click consent with full context (who, what, why, evidence), response‑time SLAs, and a safe default (such as deny or defer) if no one responds in time.

- Environment separation. Sandbox new agents, run “canary” sessions, and promote to production only with defined quality gates and safety test suites.
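A tamper‑evident ledger is often built as a hash chain: each entry stores the hash of the previous one, so altering any past entry invalidates the chain from that point on. The sketch below illustrates the idea with Python’s standard library only; `AuditLedger` and its methods are hypothetical names, and a production ledger would also need durable append‑only storage and an externally published head hash (otherwise silently truncating the newest entries goes undetected).

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # stand-in "previous hash" for the first entry


@dataclass
class LedgerEntry:
    event: dict
    prev_hash: str
    entry_hash: str


def _hash(event: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal events hash identically.
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AuditLedger:
    """Append-only log in which each entry commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: list[LedgerEntry] = []

    def append(self, event: dict) -> LedgerEntry:
        prev = self.entries[-1].entry_hash if self.entries else GENESIS
        entry = LedgerEntry(dict(event), prev, _hash(event, prev))  # store a copy
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain. Edited, reordered, or removed (non-final) entries fail;
        truncating the newest entries needs an externally stored head hash to detect."""
        prev = GENESIS
        for entry in self.entries:
            if entry.prev_hash != prev or entry.entry_hash != _hash(entry.event, prev):
                return False
            prev = entry.entry_hash
        return True


# Usage
ledger = AuditLedger()
ledger.append({"trace_id": "t1", "tool": "crm.update_ticket", "decision": "allow"})
ledger.append({"trace_id": "t1", "tool": "email.send", "decision": "require_approval"})
print(ledger.verify())  # True
ledger.entries[0].event["decision"] = "deny"  # simulate tampering with history
print(ledger.verify())  # False
```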

A practical architecture: signal, policy, and control

A robust pattern is to split the system into three cooperating planes:

- Signal plane (observability). Collect high‑fidelity telemetry from the agent framework, RAG layer, databases, data lakes, SaaS tools, and custom APIs. Normalize around a common schema (trace IDs, principals, resource URIs, sensitivity tags).

- Policy plane (decisions). Evaluate identity, purpose, contextual risk, and data/tool policies to yield allow/deny/approve with conditions. Log the decision and rationale.

- Control plane (enforcement). Enforce the decision at the data and action boundaries—rewriting queries, masking columns, constraining parameters, inserting approvals, or blocking outright.

Done right, these planes form a closed loop: observe → decide → enforce → observe the outcome. Over time, this loop produces the institutional memory to make agents both safer and more effective.
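One turn of the observe → decide → enforce loop can be sketched as follows. This is a simplified illustration under stated assumptions, not a reference design: the policy data (`ALLOWED_PURPOSES`, `SENSITIVE_COLUMNS`, the row‑count threshold) is hypothetical and hard‑coded where a real system would evaluate versioned policy code, and the signal plane is reduced to a Python list standing in for a telemetry pipeline.

```python
from dataclasses import dataclass, field
from enum import Enum


class Effect(Enum):
    ALLOW = "allow"
    DENY = "deny"
    REQUIRE_APPROVAL = "require_approval"


@dataclass
class Request:
    principal: str
    purpose: str
    table: str
    columns: list[str]
    row_count_estimate: int


@dataclass
class Decision:
    effect: Effect
    masked_columns: set[str] = field(default_factory=set)
    rationale: str = ""


# Hypothetical policy data; a real policy plane would load versioned policy code.
ALLOWED_PURPOSES = {"support_tickets": {"resolve_ticket"}}
SENSITIVE_COLUMNS = {"support_tickets": {"customer_email", "phone"}}
MAX_ROWS_WITHOUT_APPROVAL = 1_000


def decide(req: Request) -> Decision:
    """Policy plane: allow, deny, or require approval, plus conditions (columns to mask)."""
    if req.purpose not in ALLOWED_PURPOSES.get(req.table, set()):
        return Decision(Effect.DENY, rationale=f"purpose {req.purpose!r} not allowed on {req.table}")
    masked = set(req.columns) & SENSITIVE_COLUMNS.get(req.table, set())
    if req.row_count_estimate > MAX_ROWS_WITHOUT_APPROVAL:
        return Decision(Effect.REQUIRE_APPROVAL, masked, "unusually large result set")
    return Decision(Effect.ALLOW, masked, "purpose permitted; sensitive columns masked")


def enforce(decision: Decision, rows: list[dict]) -> list[dict]:
    """Control plane: apply the decision at the data boundary."""
    if decision.effect is not Effect.ALLOW:
        raise PermissionError(f"{decision.effect.value}: {decision.rationale}")
    return [{k: ("***" if k in decision.masked_columns else v) for k, v in row.items()}
            for row in rows]


def handle(req: Request, rows: list[dict], signal_log: list[dict]) -> list[dict]:
    """One loop iteration: decide, record the decision as a signal, then enforce."""
    decision = decide(req)
    signal_log.append({"principal": req.principal, "table": req.table,
                       "effect": decision.effect.value, "rationale": decision.rationale})
    return enforce(decision, rows)


# Usage
signals: list[dict] = []
req = Request("agent-42", "resolve_ticket", "support_tickets", ["id", "customer_email"], 1)
print(handle(req, [{"id": 7, "customer_email": "a@example.com"}], signals))
# [{'id': 7, 'customer_email': '***'}]
```

Keeping `decide` free of side effects makes the policy plane easy to unit‑test, and logging the decision before enforcement means denied and escalated requests are observed too. For brevity the masking is applied to rows that were already fetched; query rewriting would push it down into the data source instead.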

Where Datafi fits

Datafi was built on the premise that business information must be easier to find and safer to use. In an agent‑first world, that translates to an enterprise data control plane coupled with action‑aware governance:

- Unified data visibility. Catalog sources, sensitivity, lineage, and usage so agents can request only what they truly need.

- Fine‑grained policy enforcement. Apply column/row‑level controls, dynamic masking, and purpose‑based access at runtime—no manual rewiring per agent.

- Context‑aware query rewriting. Translate natural‑language intents into governed queries that automatically respect entitlements and data residency.

- Action governance. Register tool/function capabilities with scopes, preconditions, and guardrails. Provide dry‑run and “shadow mode” to evaluate planned actions before they touch production.

- Evidence‑first responses. Require citations and schema validation, attach provenance to outputs, and store everything in an immutable audit ledger for replay and post‑mortems.

- Least‑privilege by default. Issue ephemeral credentials bound to identity, purpose, and time so that even a compromised agent session has a limited blast radius.

The result is not just visibility into what agents did, but proactive control over what they can do with sensitive business data—exactly what enterprises need to move from pilots to production with confidence.
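Ephemeral, purpose‑bound credentials can be illustrated with a short‑lived, HMAC‑signed token. This is a hypothetical, JWT‑like format built from the standard library to show the idea, not Datafi’s API or a recommended wire format; in practice you would use a vetted token standard and a managed secret. The point is that purpose, scopes, and expiry travel inside the signed payload, so a leaked token is useless for any other purpose and stops working once its TTL elapses.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical key for illustration; a real system would fetch and rotate it via a secrets manager.
SIGNING_KEY = b"replace-with-a-managed-secret"


def _sign(body: str) -> str:
    return hmac.new(SIGNING_KEY, body.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_credential(principal: str, purpose: str, scopes: list[str],
                     ttl_seconds: int, now: Optional[float] = None) -> str:
    """Mint a short-lived token whose purpose, scopes, and expiry are covered by the signature."""
    now = time.time() if now is None else now
    claims = {"sub": principal, "purpose": purpose,
              "scopes": sorted(scopes), "exp": int(now) + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode("ascii")
    return f"{body}.{_sign(body)}"


def check_credential(token: str, required_scope: str, purpose: str,
                     now: Optional[float] = None) -> bool:
    """Accept only an untampered, unexpired token issued for this purpose and scope."""
    now = time.time() if now is None else now
    try:
        body, sig = token.rsplit(".", 1)
    except ValueError:
        return False  # not in body.signature form
    if not hmac.compare_digest(sig.encode("utf-8"), _sign(body).encode("utf-8")):
        return False  # signature mismatch: token was altered or forged
    claims = json.loads(base64.urlsafe_b64decode(body))
    return (claims["exp"] > now
            and claims["purpose"] == purpose
            and required_scope in claims["scopes"])


# Usage
tok = issue_credential("agent-42", "resolve ticket #123", ["tickets:write"], ttl_seconds=900, now=1_000)
print(check_credential(tok, "tickets:write", "resolve ticket #123", now=1_100))  # True
print(check_credential(tok, "tickets:write", "resolve ticket #123", now=2_000))  # False: expired
print(check_credential(tok, "payroll:read", "resolve ticket #123", now=1_100))   # False: out of scope
```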

An adoption path you can start today

- Inventory & tag. Establish a living catalog of data sources, sensitivity levels, and owners. Tie datasets to business purposes.

- Instrument the runtime. Add tracing at the prompt, retrieval, tool, and data layers. Adopt a shared correlation ID.

- Codify policies. Write purpose‑based access rules, data masking patterns, and action preconditions as code. Version and test them.

- Introduce the control plane. Put Datafi (or an equivalent control gateway) in the data path and tool path. Start with read‑only controls and dry‑runs.

- Run in shadow mode. Compare agent plans and proposed actions with human ground truth; measure drift, hallucination risk, and data exposure.

- Turn on enforcement. Require approvals for high‑risk actions, then progressively relax as confidence grows. Keep reversible writes until error rates meet targets.

- Continuously learn. Feed incidents, user feedback, and success/failure signals back into policies, prompts, retrieval rules, and tool scopes.
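Shadow mode can start as simply as recording what the agent proposes and comparing it with what a human actually did for the same case. The sketch below assumes both sides are logged as `ProposedAction` records keyed by a case ID (a hypothetical schema) and uses exact matching, the crudest possible agreement measure; real evaluations often need tolerant comparison of parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedAction:
    case_id: str
    tool: str
    params: tuple  # frozen (key, value) pairs so actions compare exactly


def shadow_report(agent: list[ProposedAction], human: list[ProposedAction]) -> dict:
    """Compare what the agent *would* have done with what humans actually did.

    Nothing is executed; both lists are just records keyed by case_id.
    """
    truth_by_case = {a.case_id: a for a in human}
    matched = disagreed = unmatched = 0
    for proposal in agent:
        truth = truth_by_case.get(proposal.case_id)
        if truth is None:
            unmatched += 1      # no human ground truth for this case yet
        elif truth == proposal:
            matched += 1
        else:
            disagreed += 1      # candidate for review: drift or a bad plan
    compared = matched + disagreed
    return {
        "agreement_rate": matched / compared if compared else 0.0,
        "disagreements": disagreed,
        "no_ground_truth": unmatched,
    }


# Usage
human = [ProposedAction("c1", "crm.close_ticket", (("reason", "resolved"),)),
         ProposedAction("c2", "crm.escalate", (("tier", 2),))]
agent = [ProposedAction("c1", "crm.close_ticket", (("reason", "resolved"),)),
         ProposedAction("c2", "crm.close_ticket", (("reason", "resolved"),)),
         ProposedAction("c3", "crm.escalate", (("tier", 1),))]
print(shadow_report(agent, human))
# {'agreement_rate': 0.5, 'disagreements': 1, 'no_ground_truth': 1}
```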

Metrics that matter

Define success with concrete, decision‑centric measures:

- Mean time to explain (MTTX) any agent action

- % actions executed with attached evidence/citations

- Hallucination/validation failure rate caught before actions execute

- Sensitive data exposure averted (blocked or masked)

- Approval dwell time for high‑risk actions

- Cost per correct decision (compute + human time)

- Replay coverage: % of traces reproducible end‑to‑end
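Several of these metrics fall straight out of the trace and audit data once it is captured. As a minimal sketch, assuming a hypothetical per‑action record with citation, approval‑timestamp, and replay fields, the function below computes the evidence rate, median approval dwell time, and replay coverage (a trace counts as reproducible only if every action in it replayed successfully).

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class ActionRecord:
    trace_id: str
    has_citations: bool                             # evidence attached to the action?
    approval_requested_at: Optional[float] = None   # seconds; None if no approval needed
    approval_granted_at: Optional[float] = None
    replayed_ok: Optional[bool] = None              # None = never replayed


def metrics(records: list[ActionRecord]) -> dict:
    n = len(records)
    dwell = [r.approval_granted_at - r.approval_requested_at
             for r in records
             if r.approval_requested_at is not None and r.approval_granted_at is not None]
    traces = {r.trace_id for r in records}
    # A trace is reproducible only if every one of its actions replayed successfully.
    not_reproducible = {r.trace_id for r in records if r.replayed_ok is not True}
    reproducible = traces - not_reproducible
    return {
        "pct_actions_with_evidence": round(100 * sum(r.has_citations for r in records) / n, 1) if n else 0.0,
        "median_approval_dwell_s": median(dwell) if dwell else None,
        "replay_coverage_pct": round(100 * len(reproducible) / len(traces), 1) if traces else 0.0,
    }


# Usage
recs = [
    ActionRecord("t1", True, 100.0, 160.0, True),
    ActionRecord("t1", True, replayed_ok=True),
    ActionRecord("t2", False, 200.0, 500.0, False),
    ActionRecord("t3", True),
]
print(metrics(recs))
# {'pct_actions_with_evidence': 75.0, 'median_approval_dwell_s': 180.0, 'replay_coverage_pct': 33.3}
```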

The bottom line

Autonomous agents will unlock enormous productivity, but autonomy without observability is recklessness at scale. Full AI observability, grounded in a data control plane, action governance, and policy‑as‑code, turns agents from opaque black boxes into trustworthy coworkers that are explainable, auditable, and controllable. By placing Datafi’s control plane between agents and your most sensitive systems, you gain the power not only to see what agents do with your business data, but to shape it, so that their actions stay within policy and each one can be explained after the fact.
