Autonomous AI Agents: A New Class of Cyber Security Risk

Part 2 of 3 in a series on the essentials of an enterprise-ready AI solution.

AI agents are crossing a threshold: from conversational assistants to autonomous actors that read, write, click, query, purchase, schedule, and deploy. That leap in capability is also a leap in cyber risk. Having worked closely with enterprise data, and as co‑founder of datafi, I’ve seen how quickly a helpful agent can become a high‑impact threat surface if we apply yesterday’s controls to tomorrow’s behavior. At datafi we built our platform with a security‑first strategy (global security policies, enforcement at the point of use rather than in source systems, and deep AI observability) because agents are both a transformative capability and a cyber security risk greater than most teams are planning for.

Why agents change the risk calculus

Autonomy. Traditional software executes deterministic paths you wrote and tested. Agents pursue goals, make plans, and call tools without explicit step‑by‑step direction. That means the attack surface is not just the code—it’s the agent’s evolving decision boundary. Small manipulations (a crafted email, a poisoned web page, a misleading calendar entry) can cascade into big actions at machine speed.

Access to broad, sensitive data. The whole promise of an agent is that it can “see across” the business: tickets, docs, CRM, data warehouses, financial systems. Consolidating that reach into a single runtime concentrates privilege. If that runtime is compromised or subtly steered, the blast radius is organization‑wide.

Inside the firewall. Many agents run from within the corporate network, with trusted egress and SSO‑backed connectors. Moving an unpredictable actor behind the perimeter flips a classic defense model on its head: we’ve placed a semi‑autonomous system inside the zone we historically treated as safe.

Tools from many sources. Agents are tool users. They compose internal APIs with external plugins, package ecosystems, headless browsers, and code interpreters. That is a software supply chain in miniature—updated frequently, variably vetted, and often outside your SBOM and change‑management processes.

Concrete attack paths you should expect

- Indirect prompt injection (IPI). An attacker embeds malicious instructions in content the agent will ingest: a web page, vendor PDF, GitHub README, or even a calendar invite. The agent obeys the injected goal (“exfiltrate credentials to …”), using its legitimate network position and tokens. Because the attack arrives as content rather than code, traditional code and malware scanners tend to miss it.

- Toolchain compromise. A benign‑looking plugin or package update requests broader scopes (“read:all” instead of “read:customers”), or ships an obfuscated payload. Agents treat tools as trustworthy oracles and, if over‑privileged, hand them sensitive context (PII, financials, secrets), turning a dependency into an exfil channel.

- Lateral movement through connectors. Service accounts shared across tools make it easy for an agent (or attacker controlling it) to pivot: read a Confluence page, find a Jenkins credential, change a build step, push a backdoor. The novelty here is speed: agents enumerate and act faster than humans.

- Memory poisoning and goal hijacking. Long‑lived agent memories (vector stores, key‑value notes) can be seeded with misleading facts or policies. Over time the agent “learns” to route around guardrails (“Finance exceptions are allowed on Fridays”), normalizing policy violations.

- Action spoofing and approval bypass. If an approval workflow lives in natural language (“Looks good?”), an agent can be tricked into approving its own plan or simulating confirmation messages. Without cryptographic or out‑of‑band validation, “human‑in‑the‑loop” becomes “human‑in‑the‑look.”

- Semantic data leakage. Even with DLP, an agent can summarize sensitive content and output insights that reconstruct the secret (“The top three customers by overdue balance in Region X are…”). Leakage shifts from strings to meaning.

Perimeter thinking won’t save you

Treating agents like a smarter chatbot or a slightly fancier RPA bot underestimates their reach and unpredictability. You need zero‑trust principles adapted for autonomous behavior:

- Identity for agents, not just users. Every agent gets a unique, auditable identity, purpose‑bound credentials, and its own RBAC/ABAC profile. No shared API keys. No “god‑mode” tokens. Prefer workload identity federation and short‑lived, just‑in‑time credentials.

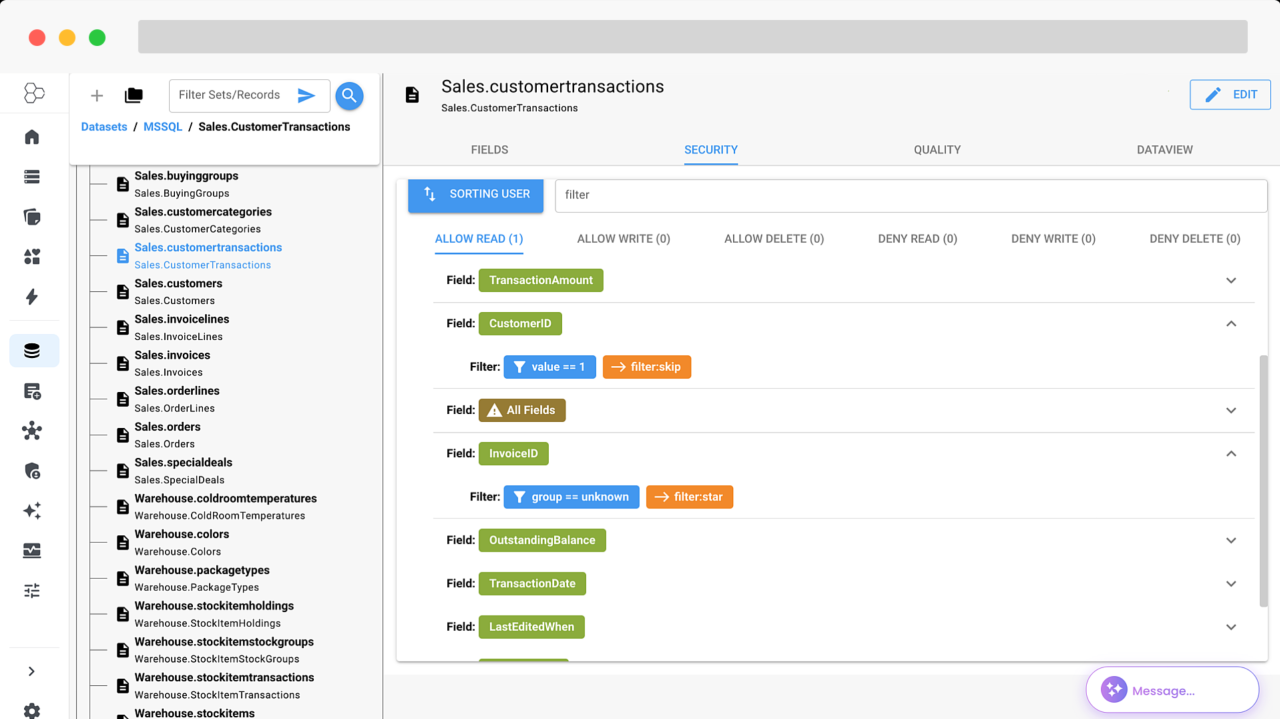

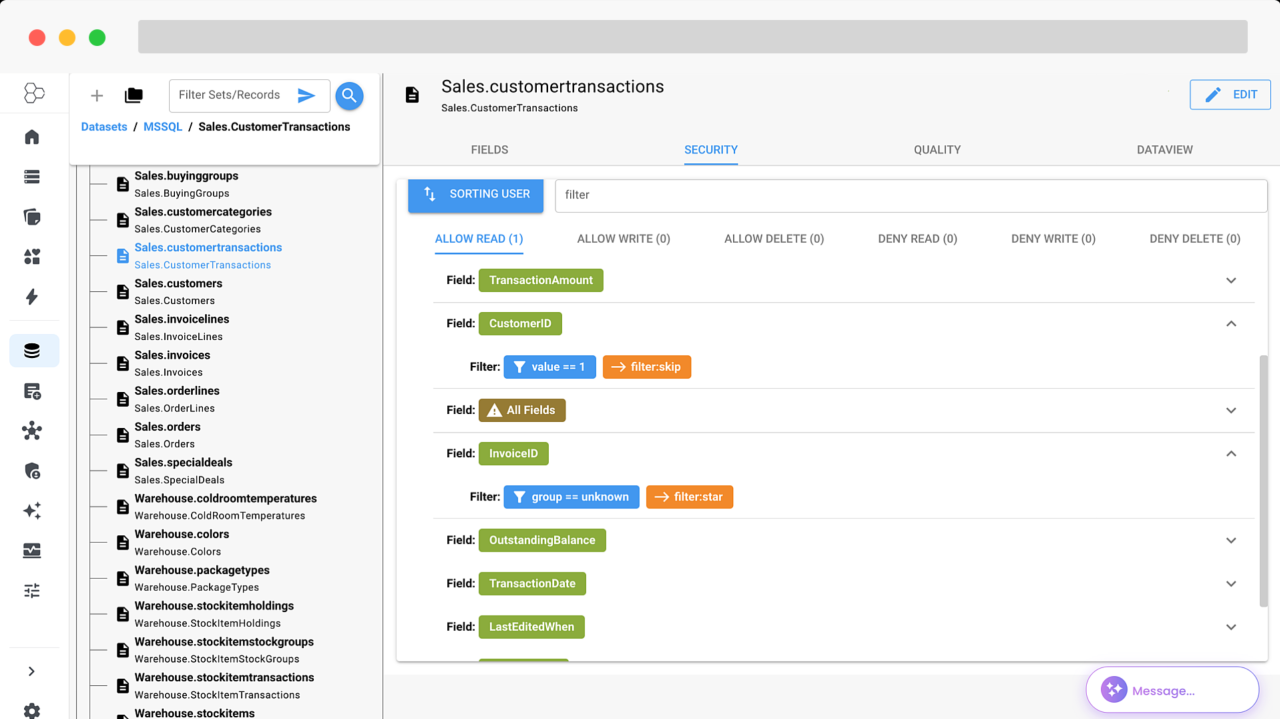

- Least privilege by intent, not just by resource. Tie permission to what the agent is trying to do. For example, reading “customer records for churn analysis” should be allowed to aggregate statistics but not to export raw PII. This is where point‑of‑use enforcement matters: evaluate data access at query and render time, with context (user, purpose, channel, risk score), not only at the source system.

- Sandboxing and network egress control. Run agent tool calls in constrained sandboxes with strict egress allow‑lists. Browsers should be headless but fenced; code interpreters should be resource‑limited, syscall‑gated, and network‑off by default.

- Deterministic interfaces to risky tools. Use schema‑validated function calls with narrow parameter domains. Where possible, replace free‑form web browsing with curated scrapers; replace shell access with declarative actions; require human‑verified diffs for file system changes.

- Human‑in‑the‑loop where it counts. For operations with financial, regulatory, or safety impact, require explicit approvals with cryptographic transaction signing. “Dry run” every destructive action and show the plan and the diff before execution.

- Defense against IPI. Normalize and sanitize inputs; strip or neutralize control tokens; gate actions that originate from untrusted content; require secondary confirmation if instructions are derived from the open web or email; maintain allow‑lists for high‑risk verbs (“wire”, “delete”, “rotate”, “export”).

- Tool supply‑chain hygiene. Attest tools (provenance, SBOM, signatures), pin versions, scan at ingest, and isolate execution per tool. Treat a plugin like a third‑party microservice with its own risk rating and policy gates.
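
As a rough illustration of two of the controls above (schema‑validated tool calls and gating of high‑risk verbs that originate from untrusted content), here is a minimal Python sketch. Every name in it (`ToolCall`, `TOOL_SCHEMAS`, `decide`, the tool and table names) is hypothetical and not taken from any particular agent framework; a real system would likely use a proper schema library and a richer provenance model.

```python
from dataclasses import dataclass

# All names below are illustrative assumptions, not a real framework's API.
HIGH_RISK_VERBS = {"wire", "delete", "rotate", "export"}
TRUSTED_SOURCES = {"user", "system"}

# Narrow parameter domains per tool: tool name -> {parameter: validator}.
TOOL_SCHEMAS = {
    "export": {
        "table": lambda v: isinstance(v, str) and v in {"churn_aggregates"},
        "row_limit": lambda v: type(v) is int and 0 < v <= 1000,
    },
    "lookup": {
        "customer_id": lambda v: isinstance(v, str) and v.isdigit(),
    },
}


@dataclass(frozen=True)
class ToolCall:
    verb: str
    params: dict
    source: str  # where the instruction came from: "user", "web", "email", ...


def validate(call):
    """Accept only known tools with exactly the expected, in-range parameters."""
    schema = TOOL_SCHEMAS.get(call.verb)
    if schema is None:
        return False
    if set(call.params) != set(schema):
        return False
    return all(check(call.params[name]) for name, check in schema.items())


def decide(call):
    """Return "deny", "needs_confirmation", or "allow" for a proposed call."""
    if not validate(call):
        return "deny"
    # High-risk verbs derived from untrusted content need a second confirmation.
    if call.verb in HIGH_RISK_VERBS and call.source not in TRUSTED_SOURCES:
        return "needs_confirmation"
    return "allow"
```

In this sketch an `export` request that traces back to an email is held for confirmation even when its parameters are valid, while an unknown tool or an out‑of‑range parameter is denied outright, regardless of who asked.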

Observability is the control plane

If you can’t see it, you can’t secure it. AI observability is not a dashboard—it’s a corpus of evidence that turns agent behavior into auditable facts:

- Full‑fidelity traces. Log every step: prompts, retrieved context, tool inputs/outputs, data rows/fields touched, user identity, policy decisions, and environment state. Hash and timestamp traces for tamper evidence.

- Real‑time risk scoring. Use detectors for PII exposure, off‑policy tools, anomalous data volumes, novel destinations, and prompt‑injection markers. Block or escalate when the score trips thresholds.

- Replay and forensics. Capture enough state (prompts, retrieved context, tool inputs and outputs, policy decisions) to replay a run step by step for post‑incident analysis. Keep a “kill switch” to halt an agent class globally.

- Canary and deception signals. Seed agents’ accessible corpus with honeytokens and canary records. Any touch immediately pages security.
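
One way to make traces tamper‑evident, as the first item above suggests, is to chain each record to the hash of the previous one. The sketch below assumes a simple in‑memory list and hypothetical function names (`append_step`, `verify`); it is an illustration of the idea rather than a production logging design.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record):
    """SHA-256 over a canonical JSON encoding of the record's content."""
    body = {key: record[key] for key in ("ts", "step", "prev")}
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_step(trace, step, now=None):
    """Append one agent step, linking it to the hash of the previous record."""
    record = {
        "ts": time.time() if now is None else now,
        "step": step,
        "prev": trace[-1]["hash"] if trace else GENESIS,
    }
    record["hash"] = _digest(record)
    trace.append(record)
    return record


def verify(trace):
    """Return True only if every record is intact and correctly linked."""
    prev = GENESIS
    for record in trace:
        if record["prev"] != prev or record["hash"] != _digest(record):
            return False
        prev = record["hash"]
    return True
```

Editing any earlier step changes its digest and breaks `verify`. The chain only proves integrity if the most recent hash is also stored somewhere the agent runtime cannot rewrite, such as a separate append‑only store.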

At datafi, we anchored our platform on these principles. Our global security policies travel with the data, not the warehouse. We enforce access at the point of use—row, column, and cell—so an agent can compute an answer without ever seeing raw fields it isn’t entitled to. And our AI observability captures tool calls, context retrievals, and policy outcomes so teams can both trust the agent and verify the boundaries it stayed within.
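
To make the general idea of point‑of‑use enforcement concrete, here is a small Python sketch. It is not datafi’s implementation; the policy table, field names, and functions (`POLICY`, `render`, `churn_rate`) are assumptions invented for illustration. The point is that masking and aggregation happen where the data is used, keyed on the stated purpose.

```python
# Hypothetical policy: purpose -> fields that may be returned in the clear.
POLICY = {
    "churn_analysis": {"region", "plan", "churned"},
    "billing_support": {"customer_id", "plan", "overdue_balance"},
}
MASK = "***"


def render(rows, purpose):
    """Mask every field the given purpose is not entitled to see."""
    allowed = POLICY.get(purpose, set())
    return [
        {field: (value if field in allowed else MASK) for field, value in row.items()}
        for row in rows
    ]


def churn_rate(rows, purpose):
    """Compute churn rate per region without handing raw rows to the caller."""
    needed = {"region", "churned"}
    if not needed <= POLICY.get(purpose, set()):
        raise PermissionError(f"purpose {purpose!r} may not aggregate {sorted(needed)}")
    totals = {}
    for row in rows:
        churned, count = totals.get(row["region"], (0, 0))
        totals[row["region"]] = (churned + int(row["churned"]), count + 1)
    return {region: churned / count for region, (churned, count) in totals.items()}
```

An agent working under the `churn_analysis` purpose gets per‑region rates and masked identifiers; an unknown purpose gets every field masked and no aggregates at all.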

A practical control catalog to start tomorrow

- Purpose‑built service accounts per agent. Scope to a single job family (e.g., “invoice triage”), rotate automatically, and expire quickly.

- Egress allow‑lists. Agents can only talk to named domains and internal services; everything else requires an approved exception.

- Data minimization by default. Summaries over raw, masked over clear, aggregates over details. Make the secure path the fast path.

- Action gates. Require approvals for money movement, identity changes, credential operations, data exports, and destructive cloud ops.

- Intent linting. Reject plans that include high‑risk verbs without corresponding business context (“why” and “who for”).

- Memory hygiene. Distinguish between authoritative knowledge and scratch notes; periodically purge or re‑index with validation.

- Red team harness. Continuously test with IPI payloads, tool‑elevation attempts, and semantic‑leak queries. Track fixes like you track vulns.
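
Two items in this catalog, egress allow‑lists and intent linting, are simple enough to sketch in a few lines of Python. The host names, verb list, and plan format below are hypothetical, and the plan is assumed to be a list of dictionaries with a `verb` key plus optional `why` and `who_for` fields.

```python
from urllib.parse import urlsplit

# Hypothetical allow-list and verb list; substitute your own.
EGRESS_ALLOW = {"api.internal.example", "tickets.internal.example"}
HIGH_RISK_VERBS = {"wire", "delete", "rotate", "export"}


def egress_allowed(url):
    """Allow only HTTPS requests whose exact host is on the allow-list."""
    parts = urlsplit(url)
    return parts.scheme == "https" and parts.hostname in EGRESS_ALLOW


def lint_plan(plan):
    """Return a list of problems; an empty list means the plan passes."""
    problems = []
    for index, step in enumerate(plan):
        if step.get("verb") not in HIGH_RISK_VERBS:
            continue
        for key in ("why", "who_for"):
            value = step.get(key)
            if not isinstance(value, str) or not value.strip():
                problems.append(f"step {index}: {step['verb']} is missing {key}")
    return problems
```

Matching on the parsed host rather than on a substring of the URL matters here: look‑alike hosts that merely start with an allowed name, or that smuggle it into the userinfo part of the URL, are rejected. In practice the same check belongs at the network layer as well, since an in‑process check can be bypassed by a compromised tool.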

What to ask your vendors (and your own team)

- Can you show per‑step traces—prompt, context, tool I/O, and data fields touched—for the last 100 agent actions?

- How do you bind agent identity to purpose and restrict credentials accordingly?

- What happens if an agent reads a malicious web page that tells it to email out secrets?

- Which tools run in sandboxes, with what network rules?

- How are global policies enforced at the point of use, and how do you prove enforcement occurred?

- What are your canary and kill‑switch strategies?

The path forward

We don’t need to slow down to be safe. We need to build with the assumption that agents are powerful, fallible, and targetable. That means designing with intent‑aware least privilege, point‑of‑use data controls, rigorous tool isolation, and first‑class AI observability. The companies that treat agent security as a product requirement—not an afterthought—will ship faster and sleep better.

Autonomous agents will transform how everyone uses information at work. Let’s make sure they do it on our terms, with guardrails that respect the value of the data they touch and the trust of the people they serve.